Challenges in Data Labeling

AI has firmly arrived. It has become a necessity worldwide, making everyday tools smarter and easier to use: self-driving cars, smart assistants, virtual mapping, and more.

But everything has its drawbacks, and the AI journey comes with its own challenges. Data is the backbone of any AI model. Collecting, analyzing, and processing are the major steps of data handling in AI. Good AI models need big data to perform well: generally, the more data, the better the predictions. This puts a large responsibility on the shoulders of data engineers, the biggest concerns being data quality and data annotation.

Poor-quality data hampers the algorithm, and mishandled data can cause a company huge losses. Data pours in from many sources, and it must be labeled properly before it can be used effectively. Many AI models rest on accurate image and pattern recognition, and data labeling is what makes that recognition possible. It is a long, tedious task, yet an important one, which is why so many companies struggle with data labeling. Wrong labels can incur heavy losses, and in the case of self-driving cars, they can even cost lives!

Let’s dig deeper into the challenges of data labeling.

Challenges in Data labeling

Workforce Management

Data labeling is a challenge in itself! Think about it: tons of unstructured data, each item waiting for a name tag while it is sorted into categories, species, and other classes. ML and AI models need big data, and to handle and label it you need to grow your workforce, not only in size but also in skill. This is a real challenge for budding businesses, and many other tasks get delayed while data annotation is under way.

Hiring people and then training them seems like a never-ending task, especially as the volume of data grows every day. In-house data labeling can be detrimental at times!

Managing Quality Flow

If you are aiming for the best results, a quality dataset is your greatest asset, and your model has to be built on quality resources. Quality alone is not enough, though: labels must also stay consistent across the whole dataset, and keeping them consistent is one of the key challenges in data labeling. Broadly, there are two types of data.

Subjective Data

Subjective data, as the name suggests, is not confined to a single interpretation. What seems sad to one person might not seem sad to another, because subjective judgments change with each person’s perspective. Subjective data therefore needs clear, detailed labeling instructions so that different annotators map the same item to the same label.
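One common way to check whether annotators are labeling subjective data consistently is to measure how often they agree beyond what chance alone would produce. The sketch below computes Cohen’s kappa for two annotators who labeled the same items; the function name `cohens_kappa` and the sample emotion labels are hypothetical, chosen only for illustration. A kappa near 1 means strong agreement, while a value near 0 suggests the labeling instructions may need tightening.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa for two annotators who labeled the same items.

    Illustrative helper (hypothetical name), not part of any product API.
    """
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two equal-length, non-empty label lists")
    n = len(labels_a)
    # Observed agreement: fraction of items both annotators labeled identically.
    p_o = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Chance agreement: for each label, multiply how often each annotator used it.
    freq_a = Counter(labels_a)
    freq_b = Counter(labels_b)
    p_e = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    if p_e == 1:
        # Both annotators used one identical label for every item.
        return 1.0
    return (p_o - p_e) / (1 - p_e)


# Hypothetical labels from two annotators tagging the same six text snippets.
annotator_a = ["sad", "sad", "happy", "neutral", "happy", "sad"]
annotator_b = ["sad", "neutral", "happy", "neutral", "sad", "sad"]

kappa = cohens_kappa(annotator_a, annotator_b)
print(f"kappa = {kappa:.3f}")  # kappa = 0.478
```

Here the annotators agree on four of six items, but after correcting for chance the kappa is only about 0.48, a hint that the instructions for "sad" versus "neutral" may be ambiguous.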

Objective Data

Objective data, on the other hand, has a single correct answer: if an item has a label, that label holds for everyone. Objective data, however, often needs domain expertise, so the workforce must be experienced enough to label accurately, and manual errors add further pressure. Handling data quality can quickly turn into havoc!

Money Matters

AI is a boon, but a single setup can cost you heavily. Data pattern recognition is the foundational step, and many AI projects fall apart because of errors in this initial phase. Data labeling requires proper analysis, tools, metrics, and visualizations. In-house labeling may look cheaper at first, but it can turn into an expensive liability later.

Using a data labeling service is the best option in the long run. The service takes care of proper labeling, so the company can focus on other tasks and become more productive.

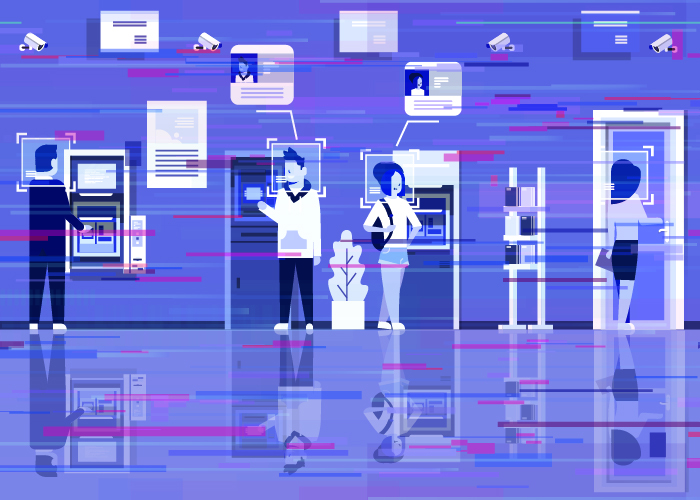

Privacy and security

Data comes from a wide pool of sources, traveling across nations and cities. It is varied and often includes personal data, and data scraped from websites may compromise individuals’ privacy. Companies therefore need to comply with data protection regulations and frameworks such as GDPR, CCPA, SOC 2, and the DPA, which are widely recognized benchmarks. They also need to ensure that data is accessed only on secured devices, ruling out insecure transfer and use. Maintaining such high standards is demanding, and companies must adhere to these security policies while still meeting all their quality norms.

Smart tools and analytics

A bad workman blames his tools!

Contrary to the idiom, AI processes really do depend on the right tools. The framework spans data annotation, pipelines, data management, analysis, and more. As a company grows, its data grows too, and all of it needs annotation. Large datasets need smart tools to handle specific tasks. Organizations that focus primarily on data labeling already own these tools, whereas buying them for in-house labeling can be costly. That is why in-house data labeling is not recommended: money is a valuable asset that is better invested in other important areas.

For organizations offering data labeling services, these tools are a necessity: they raise the quality of the service by delivering accurate and effective results.

An AI model needs a strong foundation, and data labeling forms that foundation: the right labels lead to an effective model. That is why investing in a data labeling service is the best call!

Automaton AI provides ADVIT, an AI-assisted training data platform.

ADVIT is a revolutionary product that provides an end-to-end data labeling solution, from data processing to hierarchical tagging.

The data passes through a series of operations and every checkpoint of the QA process to ensure accuracy.

Features of ADVIT:

- Scalable

- 60% reduction in per-label time

- 2x lower than the market cost

Automaton AI has a team of top-notch AI engineers who provide full technical support, taking care of problems and providing statistical solutions. ADVIT is effective and delivers the desired results. The Automaton AI team understands the importance of data labeling, so it takes all these challenges on its shoulders while you receive the labeled data!

Voilà! Check it out: https://automatonai.com/products/advit/